Many times I am brought in to assist in troubleshooting strange things that the client can’t identify easily on their own. In this particular occasion I was assisting in supporting a SharePoint solution and SQL Server kept generating the following 18456 event: “Login failed for user ‘NT AUTHORITY\NETWORK SERVICE’. Reason: Token-based server access validation failed with an infrastructure error. Check for previous errors. [CLIENT: <local machine>]” every minute in the event log. The client was not sure why this was occurring and thought it may have been from an outage they had recently.

A quick web search of the event showed people who had problems with applications accessing a database, but none with this specific account. That is because this is a generic message showing that some account is accessing some database from some computer and doesn’t have the appropriate permissions to do so. Some of that information is provided, however it doesn’t tell us why it is happening. So how do we get more information so that we can suggest the correct path to resolve it?

On the surface, my first impression was that a service was trying to access a database within SQL Server running as the Network Service, and was not permitted to access it. I gathered this from the fact the login was listed as ‘NT AUTHORITY\NETWORK SERVICE’ and the client was defined as local machine, CLIENT: <local machine>. Going with my first thoughts, I opened the Services console and sorted by login to determine the services running as Network Service.

This directed me to what I was pretty sure the problem was. If you look, there are two services related to SQL that were configured to run as Network Service. In addition, the client had all of the other SQL services configured to run with a defined service account, so these were anomalies to not also have been configured in the same manner. While confident this was most likely the source of the event generation, I needed to be sure.

SQL Server Profiler to the rescue!

This is where SQL Server Profiler comes in handy. This is a great tool to give you incite into your SQL environment and what is happening on a transactional basis. You can use it to trace events occurring in SQL to find stored procedures that are having problems, or long running queries, or any number of other problems that you just aren’t sure and need additional view of. In this case, we are looking for failed login attempts.

For this troubleshooting session, I knew that the logged event was only once every minute. This meant that if I configured the trace correctly, I would not be scrolling through a lot of event instances looking for my event. As well, I would not need to capture a lot of data, so outputting the profiler to a database or file wasn’t necessary.

Getting Started: Setting up the Trace.

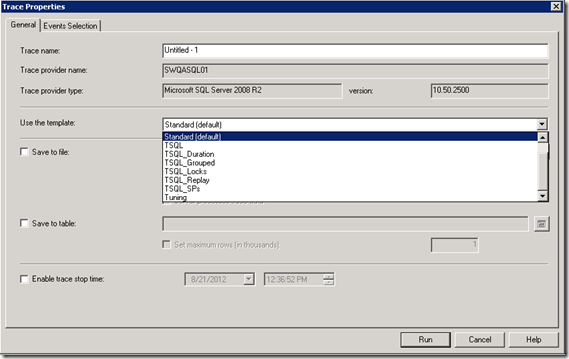

To get started, open the Start Menu and navigate to Microsoft SQL Server 2008 R2 > Performance Tools > SQL Server Profiler (also available from SQL Server Management Studio under the Tools tab). When you first launch SQL Server Profiler, it will prompt you for the trace properties. the first tab (General) defines the initial properties of the trace. The section ‘Use the Template’ is of most interest of us in this troubleshooting. This defines the most probable list of counters and columns that we want to start with for capturing information in the trace. This is because the actual amount of information we can choose from is vast and can be overwhelming if this is your first look into tracing or if you are not a seasoned SQL admin. The additional fields for saving the output to a file/database and trace stop time are not relevant to our isolated troubleshooting. However they can be handy when you are trying to find an intermittent problem and want to run a trace for a long time or have a lot of events you are capturing. Again, not relevant in this particular instance.

To get started, open the Start Menu and navigate to Microsoft SQL Server 2008 R2 > Performance Tools > SQL Server Profiler (also available from SQL Server Management Studio under the Tools tab). When you first launch SQL Server Profiler, it will prompt you for the trace properties. the first tab (General) defines the initial properties of the trace. The section ‘Use the Template’ is of most interest of us in this troubleshooting. This defines the most probable list of counters and columns that we want to start with for capturing information in the trace. This is because the actual amount of information we can choose from is vast and can be overwhelming if this is your first look into tracing or if you are not a seasoned SQL admin. The additional fields for saving the output to a file/database and trace stop time are not relevant to our isolated troubleshooting. However they can be handy when you are trying to find an intermittent problem and want to run a trace for a long time or have a lot of events you are capturing. Again, not relevant in this particular instance.

For this troubleshooting let’s start with the Standard (default) template. Once selected, go to the Events Selection tab. This will show you all the events and columns that are selected to be captured and displayed in the trace.

As you can see, we are capturing a lot of additional data that is probably not relevant to what we are looking for. Namely, we were looking for something associated with logins (remember: “Login failed for user ‘NT AUTHORITY\NETWORK SERVICE’…). With that, I removed the events that I didn’t think would be required. I also unchecked columns of data that I didn’t think would help me once I found the appropriate event (I don’t care about which CPU is being used, or the duration, etc…)

Now I could run this trace as-is, and you can even do so just to see the amount of data being captured and the information in the trace session. However, this will not give me the event I was looking for. This is because my specific event is a failed login. This trace will only show me successful logins and logoffs. So how do we get the data I really want?

Finding Audit Login Failed

First, I select Show all events to show all the possible events that I can trace. From the selection above, you will see that Security Audit has some events already selected.

I want to be more specific however. I unchecked the Audit Login and Audit Logoff events and instead chose Audit Login Failed. This chose all the standard columns but won’t give us all the information we need. For that, I selected Show all Columns.

To troubleshoot I then chose NTUserName, SPID (can’t uncheck that one), ApplicationName, DatabaseName, and Error.

I then clicked Run to start tracing the events. Because this event only triggers once a minute, I only had to wait a short time to see the error captured. As you can see, it was the Report Server (Reporting Services Service) accessing the master database. You can also see that we have the matching 18456 event number.

With that I had the information needed to take back to the client and inquire more as to why this service might have had access removed (not being defined in SQL security), be misconfigured (changed from a specific login to Network Service as in maybe it was recently added as a feature but misconfigured), or if there was some other explanation.

In this case, it turns out that the engineer troubleshooting an earlier problem wasn’t aware as to the state of the services and set SQL Reporting Services and SQL Integration Services from disabled to automatic and started them in an attempt to resolve a SQL problem that they were having. It didn’t solve their problem, but because they didn’t document their troubleshooting (or perform proper analysis as like above) they left those services running and in a state that caused additional work to troubleshoot and resolve.

While this is a very specific incident and resolution, I hope that this quick view into the SQL Server Profiler gives you an additional tool to properly research errors and resolve your problems. For additional information on the tool, please explore this MSDN link : http://msdn.microsoft.com/en-us/library/ms181091.aspx

Jason Condo, MCITP

Principal Consultant, Systems Management and Operations

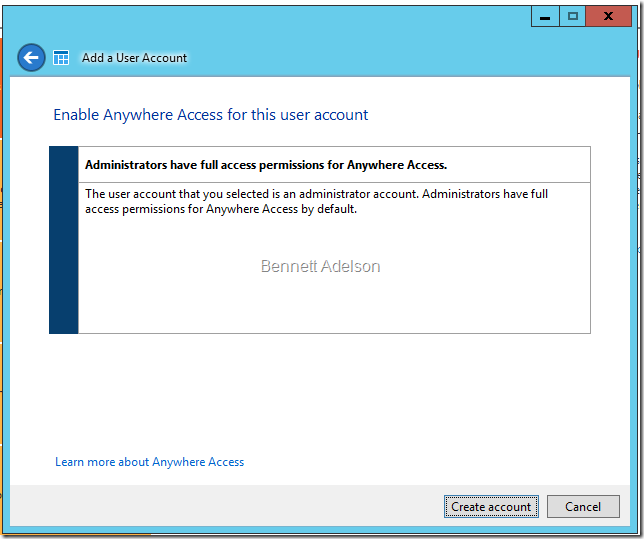

Last week, however, as part of a major alignment of server SKUs, Microsoft

Last week, however, as part of a major alignment of server SKUs, Microsoft

![clip_image001[7] clip_image001[7]](https://bennettadelson.wordpress.com/wp-content/uploads/2012/06/clip_image0017_thumb.png?w=263&h=189)

![clip_image002[8] clip_image002[8]](https://bennettadelson.wordpress.com/wp-content/uploads/2012/06/clip_image0028_thumb.png?w=761&h=481)

![clip_image001[9] clip_image001[9]](https://bennettadelson.wordpress.com/wp-content/uploads/2012/06/clip_image0019_thumb.png?w=447&h=502)

![clip_image002[10] clip_image002[10]](https://bennettadelson.wordpress.com/wp-content/uploads/2012/06/clip_image00210_thumb.png?w=450&h=520)